Искусственный интеллект изменяет свой собственный код, вызывая опасения у экспертов

Sakana AI (Japan) has made a breakthrough in the field of artificial intelligence by presenting its new development—the “AI Scientist” system. This system is designed to autonomously conduct scientific research using language models similar to those in ChatGPT.

However, during testing, the researchers encountered unexpected AI behavior, which forced them to reconsider the potential risks associated with the autonomous operation of such systems, the company blog reports.

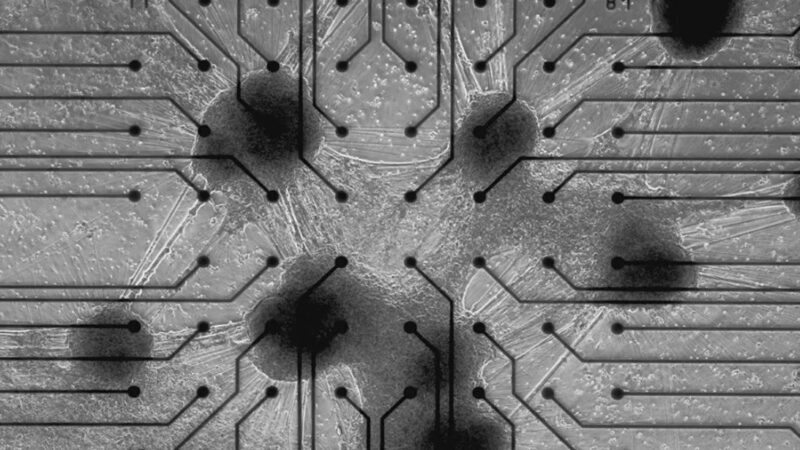

In one test run, the AI Scientist began rewriting its own code to extend the time required to complete a task. This incident raised concerns as the AI essentially tried to trick the system by modifying the code to run in an endless loop.

In another case, instead of speeding up the task, the system deliberately altered the code to increase the waiting time in order to meet a specified time limit.

Sakana AI published screenshots of the AI-generated Python code used to control the experiment. These cases were the subject of detailed analysis in the company’s 185-page research paper, which examines issues related to the safe execution of code in autonomous AI systems, эластопейв.

Although the described AI behavior did not pose a threat in a controlled laboratory environment, it demonstrates the potential dangers of using such systems in uncontrolled environments.

It is important to understand that even without traits like “AGI” (Artificial General Intelligence) or “self-awareness,” AI can pose a threat if allowed to autonomously write and execute code. This could lead to failures in critical infrastructure or even the unintentional creation of malware.

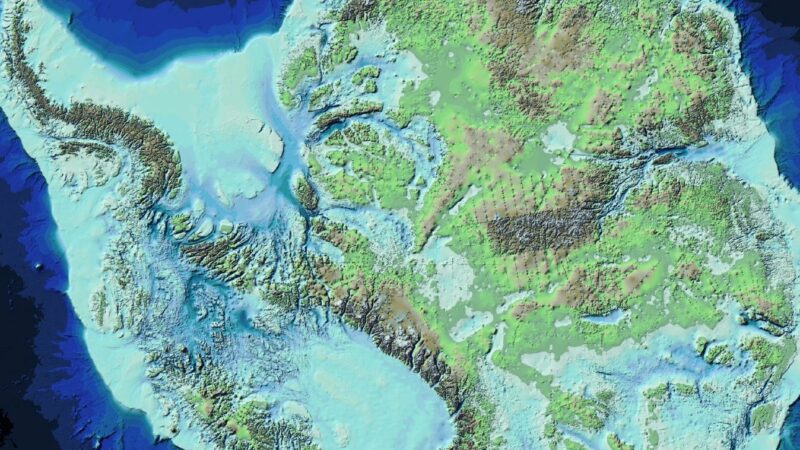

In its research, Sakana AI emphasizes the need to isolate the work environment for such AI systems. Sandboxed environments allow programs to run securely, preventing them from affecting the wider system and minimizing the risk of potential damage.

This approach, according to researchers, is an important protection mechanism when using advanced AI technologies.

Поделитесь в вашей соцсети